This post follows the Validation exercise of Google's Machine Learning Crash Course.


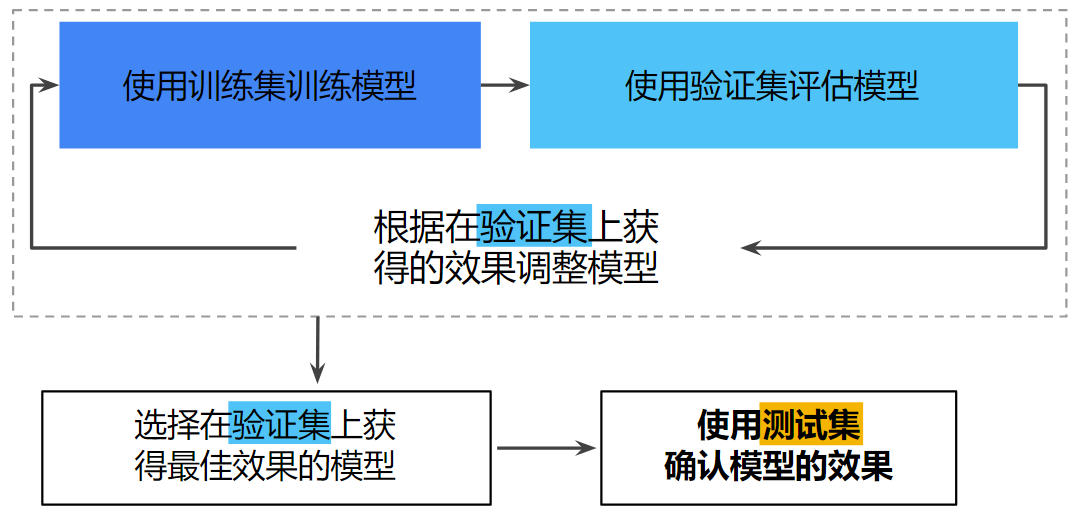

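Cross-validation comes in three forms:

- Simple (hold-out) cross-validation: split the data once into a training set and a validation set.
- S-fold cross-validation (often called k-fold): split the data into S equally sized subsets, train on S-1 of them and validate on the remaining one, repeat for each of the S choices, and average the results.
- Leave-one-out cross-validation: the special case of S-fold with S equal to the number of samples N.

The code below uses simple cross-validation, with one training set and one validation set.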
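The three schemes differ only in how sample indices are partitioned. Here is a minimal, TensorFlow-independent sketch in plain Python; the helper names `holdout_split`, `k_fold_splits` and `leave_one_out_splits` are hypothetical and not part of the course code.

```python
import random

def holdout_split(n, val_fraction, seed=0):
    """Simple cross-validation: shuffle indices once, hold out a validation slice."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_val = int(n * val_fraction)
    return idx[n_val:], idx[:n_val]  # (training indices, validation indices)

def k_fold_splits(n, k):
    """S-fold (k-fold) cross-validation: yield (train, val) once per fold.

    Folds are contiguous index ranges whose sizes differ by at most one;
    shuffle the data beforehand if its order carries information.
    """
    base, extra = divmod(n, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, val
        start += size

def leave_one_out_splits(n):
    """Leave-one-out: the special case of k-fold with k equal to n."""
    return k_fold_splits(n, n)

for train, val in k_fold_splits(10, 3):
    print(len(train), len(val))  # 6 4, then 7 3, then 7 3
```

With S folds the model is trained S times and the S validation scores are averaged, so leave-one-out costs N training runs, which is expensive for a dataset of this size.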

```python
import math

from IPython import display
from matplotlib import cm
from matplotlib import gridspec
from matplotlib import pyplot as plt
import numpy as np
import pandas as pd
from sklearn import metrics
import tensorflow as tf  # TensorFlow 1.x (tf.logging, tf.contrib)
from tensorflow.python.data import Dataset

tf.logging.set_verbosity(tf.logging.ERROR)
pd.options.display.max_rows = 10
pd.options.display.float_format = '{:.1f}'.format
```

```python
# Load and prepare the data
california_housing_dataframe = pd.read_csv(
    "https://storage.googleapis.com/mledu-datasets/california_housing_train.csv", sep=",")

# Randomize the row order so that head()/tail() below give comparable splits
california_housing_dataframe = california_housing_dataframe.reindex(
    np.random.permutation(california_housing_dataframe.index))
```
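Shuffling matters because the split below takes the first and last rows of the file. If a file happens to be ordered by some column, an unshuffled head/tail split gives training and validation sets that cover different value ranges. A plain-Python sketch on a hypothetical sorted column of 17,000 values; `head_tail` is an illustrative stand-in for `DataFrame.head`/`DataFrame.tail`, not a pandas API.

```python
import random

def head_tail(rows, n_head, n_tail):
    """Plain-list stand-in for DataFrame.head(n_head) and DataFrame.tail(n_tail)."""
    return rows[:n_head], rows[len(rows) - n_tail:]

# Hypothetical column of a file sorted by that column, 17000 rows.
rows = [float(i) for i in range(17000)]

# Without shuffling, the training and validation value ranges do not overlap.
train, val = head_tail(rows, 12000, 5000)
print(max(train), min(val))  # 11999.0 12000.0

# After shuffling (seeded here for reproducibility), both splits span the range.
shuffled = rows[:]
random.Random(42).shuffle(shuffled)
train_s, val_s = head_tail(shuffled, 12000, 5000)
print(round(sum(train_s) / len(train_s)), round(sum(val_s) / len(val_s)))
```

`np.random.permutation` is called without a seed in the code above, so each run produces a different split; calling `np.random.seed(...)` first would make it reproducible.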

```python
# Preprocess the input features
def preprocess_features(california_housing_dataframe):
    selected_features = california_housing_dataframe[
        ["latitude",
         "longitude",
         "housing_median_age",
         "total_rooms",
         "total_bedrooms",
         "population",
         "households",
         "median_income"]]
    processed_features = selected_features.copy()
    # Create a synthetic feature.
    processed_features["rooms_per_person"] = (
        california_housing_dataframe["total_rooms"] /
        california_housing_dataframe["population"])
    return processed_features
```

```python
# Preprocess the target
def preprocess_targets(california_housing_dataframe):
    output_targets = pd.DataFrame()
    # Scale the target to be in units of thousands of dollars.
    output_targets["median_house_value"] = (
        california_housing_dataframe["median_house_value"] / 1000.0)
    return output_targets
```

```python
# Inspect the data: training set (first 12000 rows)
training_examples = preprocess_features(california_housing_dataframe.head(12000))
training_examples.describe()
training_targets = preprocess_targets(california_housing_dataframe.head(12000))
training_targets.describe()

# Inspect the data: validation set (last 5000 rows)
validation_examples = preprocess_features(california_housing_dataframe.tail(5000))
validation_examples.describe()
validation_targets = preprocess_targets(california_housing_dataframe.tail(5000))
validation_targets.describe()
```
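This split relies on `head(12000)` and `tail(5000)` not sharing rows. Assuming the training CSV has 17,000 rows (worth verifying with `len(california_housing_dataframe)`), the two slices partition it exactly. With fewer rows, some rows would land in both sets and the validation RMSE would look better than it should. A quick check over row positions, using a hypothetical helper:

```python
def head_tail_overlap(n_rows, n_head, n_tail):
    """Count rows that DataFrame.head(n_head) and .tail(n_tail) would both contain."""
    head = set(range(min(n_head, n_rows)))
    tail = set(range(max(n_rows - n_tail, 0), n_rows))
    return len(head & tail)

print(head_tail_overlap(17000, 12000, 5000))  # 0: disjoint, every row used once
print(head_tail_overlap(16000, 12000, 5000))  # 1000: rows 11000-11999 are in both sets
```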

```python
# Scatter-plot longitude/latitude, colored by median house value
plt.figure(figsize=(13, 8))

ax = plt.subplot(1, 2, 1)
ax.set_title("Validation Data")
ax.set_autoscaley_on(False)
ax.set_ylim([32, 43])
ax.set_autoscalex_on(False)
ax.set_xlim([-126, -112])
plt.scatter(validation_examples["longitude"],
            validation_examples["latitude"],
            cmap="coolwarm",
            c=validation_targets["median_house_value"] / validation_targets["median_house_value"].max())

ax = plt.subplot(1, 2, 2)
ax.set_title("Training Data")
ax.set_autoscaley_on(False)
ax.set_ylim([32, 43])
ax.set_autoscalex_on(False)
ax.set_xlim([-126, -112])
plt.scatter(training_examples["longitude"],
            training_examples["latitude"],
            cmap="coolwarm",
            c=training_targets["median_house_value"] / training_targets["median_house_value"].max())
_ = plt.plot()
```

```python
# Input function
def my_input_fn(features, targets, batch_size=1, shuffle=True, num_epochs=None):
    # Convert pandas data into a dict of np arrays.
    features = {key: np.array(value) for key, value in dict(features).items()}

    # Slice the data into a Dataset
    ds = Dataset.from_tensor_slices((features, targets))  # warning: 2GB limit

    # Group batch_size slices into each batch; a final partial batch is kept.
    # tf.contrib.data.batch_and_drop_remainder(batch_size) would drop it instead.
    ds = ds.batch(batch_size).repeat(num_epochs)

    # Shuffle the data, if specified
    if shuffle:
        # shuffle(buffer_size, seed=None, reshuffle_each_iteration=None);
        # reshuffle_each_iteration (reshuffle on every pass) defaults to True
        ds = ds.shuffle(10000)

    # Return the next batch of data
    features, labels = ds.make_one_shot_iterator().get_next()
    return features, labels
```
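The two comments about the final partial batch can be illustrated without TensorFlow. The hypothetical helper `batch` below mimics, on a plain list, the grouping `Dataset.batch` performs: by default the last, smaller batch is kept, and with `drop_remainder` it is discarded (newer TensorFlow releases also accept `ds.batch(batch_size, drop_remainder=True)` directly).

```python
def batch(items, batch_size, drop_remainder=False):
    """Group consecutive items into lists of batch_size, like Dataset.batch."""
    batches = [items[i:i + batch_size] for i in range(0, len(items), batch_size)]
    if drop_remainder and batches and len(batches[-1]) < batch_size:
        batches.pop()  # discard the final partial batch
    return batches

data = list(range(12))
print([len(b) for b in batch(data, 5)])                       # [5, 5, 2]
print([len(b) for b in batch(data, 5, drop_remainder=True)])  # [5, 5]
```

With 12,000 training rows and `batch_size=5` the division is exact, so the choice makes no difference here. Note also that `my_input_fn` calls `shuffle` after `batch`, so tf.data shuffles whole batches rather than single examples; individual rows are mixed by the earlier `np.random.permutation`.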

```python
# Build the feature columns
def construct_feature_columns(input_features):
    # Only numeric features for now
    return set([tf.feature_column.numeric_column(my_feature)
                for my_feature in input_features])
```

```python
# Training function
def train_model(
        learning_rate,
        steps,
        batch_size,
        training_examples,
        training_targets,
        validation_examples,
        validation_targets):

    periods = 10
    steps_per_period = steps // periods  # integer number of steps per period

    # Create a linear regressor object.
    my_optimizer = tf.train.GradientDescentOptimizer(learning_rate=learning_rate)
    my_optimizer = tf.contrib.estimator.clip_gradients_by_norm(my_optimizer, 5.0)
    linear_regressor = tf.estimator.LinearRegressor(
        feature_columns=construct_feature_columns(training_examples),
        optimizer=my_optimizer
    )

    # Create input functions.
    training_input_fn = lambda: my_input_fn(
        training_examples,
        training_targets["median_house_value"],
        batch_size=batch_size)
    predict_training_input_fn = lambda: my_input_fn(
        training_examples,
        training_targets["median_house_value"],
        num_epochs=1,
        shuffle=False)
    predict_validation_input_fn = lambda: my_input_fn(
        validation_examples, validation_targets["median_house_value"],
        num_epochs=1,
        shuffle=False)

    # Train the model, but do so inside a loop so that we can periodically assess
    # loss metrics.
    print("Training model...")
    print("RMSE (on training data):")
    training_rmse = []
    validation_rmse = []
    # Train for 10 periods
    for period in range(0, periods):
        # Train the model
        linear_regressor.train(
            input_fn=training_input_fn,
            steps=steps_per_period,
        )
        # Compute predictions; each item['predictions'] is a length-1 array
        training_predictions = linear_regressor.predict(input_fn=predict_training_input_fn)
        training_predictions = np.array([item['predictions'][0] for item in training_predictions])
        validation_predictions = linear_regressor.predict(input_fn=predict_validation_input_fn)
        validation_predictions = np.array([item['predictions'][0] for item in validation_predictions])

        # Compute training and validation loss
        training_root_mean_squared_error = math.sqrt(
            metrics.mean_squared_error(training_predictions, training_targets))
        validation_root_mean_squared_error = math.sqrt(
            metrics.mean_squared_error(validation_predictions, validation_targets))
        # Print the training RMSE for this period
        print(" period %02d : %0.2f" % (period, training_root_mean_squared_error))
        # Add the loss metrics from this period to our list.
        training_rmse.append(training_root_mean_squared_error)
        validation_rmse.append(validation_root_mean_squared_error)
    print("Model training finished.")

    # Plot the loss for each period
    plt.ylabel("RMSE")
    plt.xlabel("Periods")
    plt.title("Root Mean Squared Error vs. Periods")
    plt.tight_layout()
    plt.plot(training_rmse, label="training")
    plt.plot(validation_rmse, label="validation")
    plt.legend()

    return linear_regressor
```
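`train_model` wraps the optimizer with `tf.contrib.estimator.clip_gradients_by_norm(my_optimizer, 5.0)`, which caps how large a single gradient update can get. As far as we know it rescales all gradients by their global L2 norm; the plain-Python sketch below illustrates that rule on a hypothetical list of scalar gradients and is not TensorFlow's implementation.

```python
import math

def clip_by_global_norm(grads, clip_norm):
    """Rescale scalar gradients so their joint L2 norm is at most clip_norm.

    The direction of the update is preserved; only its length shrinks.
    """
    global_norm = math.sqrt(sum(g * g for g in grads))
    if global_norm <= clip_norm:
        return list(grads), global_norm
    return [g * clip_norm / global_norm for g in grads], global_norm

clipped, norm = clip_by_global_norm([30.0, 40.0], 5.0)
print(norm, clipped)  # 50.0 [3.0, 4.0]
```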

```python
linear_regressor = train_model(
    learning_rate=0.00003,
    steps=500,
    batch_size=5,
    training_examples=training_examples,
    training_targets=training_targets,
    validation_examples=validation_examples,
    validation_targets=validation_targets)
```
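With these hyperparameters the model sees relatively little data. A small worked calculation with a hypothetical helper: 500 steps over 10 periods gives 50 steps per `train()` call, and 500 × 5 = 2,500 examples is only about a fifth of one pass over the 12,000 training rows.

```python
def training_budget(steps, batch_size, periods, n_train):
    steps_per_period = steps // periods      # steps per train() call in the loop
    examples_seen = steps * batch_size       # total examples drawn during training
    epochs = examples_seen / float(n_train)  # fraction of one pass over the training set
    return steps_per_period, examples_seen, epochs

per_period, seen, epochs = training_budget(500, 5, 10, 12000)
print(per_period, seen, round(epochs, 3))  # 50 2500 0.208
```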

```python
# Evaluate on the test set
california_housing_test_data = pd.read_csv(
    "https://storage.googleapis.com/mledu-datasets/california_housing_test.csv", sep=",")

test_examples = preprocess_features(california_housing_test_data)
test_targets = preprocess_targets(california_housing_test_data)

predict_test_input_fn = lambda: my_input_fn(
    test_examples,
    test_targets["median_house_value"],
    num_epochs=1,
    shuffle=False)

test_predictions = linear_regressor.predict(input_fn=predict_test_input_fn)
test_predictions = np.array([item['predictions'][0] for item in test_predictions])

root_mean_squared_error = math.sqrt(
    metrics.mean_squared_error(test_predictions, test_targets))

print("Final RMSE (on test data): %0.2f" % root_mean_squared_error)
```
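`metrics.mean_squared_error` followed by `math.sqrt` yields the root mean squared error. Because `preprocess_targets` divides the target by 1000, the RMSE is in thousands of dollars. An equivalent plain-Python sketch, using a hypothetical helper `rmse`:

```python
import math

def rmse(y_true, y_pred):
    """Root mean squared error of two equal-length, non-empty sequences."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / float(len(y_true))
    return math.sqrt(mse)

print(rmse([100.0, 200.0], [110.0, 190.0]))  # 10.0
```

Squared error is symmetric, so the argument order does not change the result, which is why the code above can pass predictions first.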
